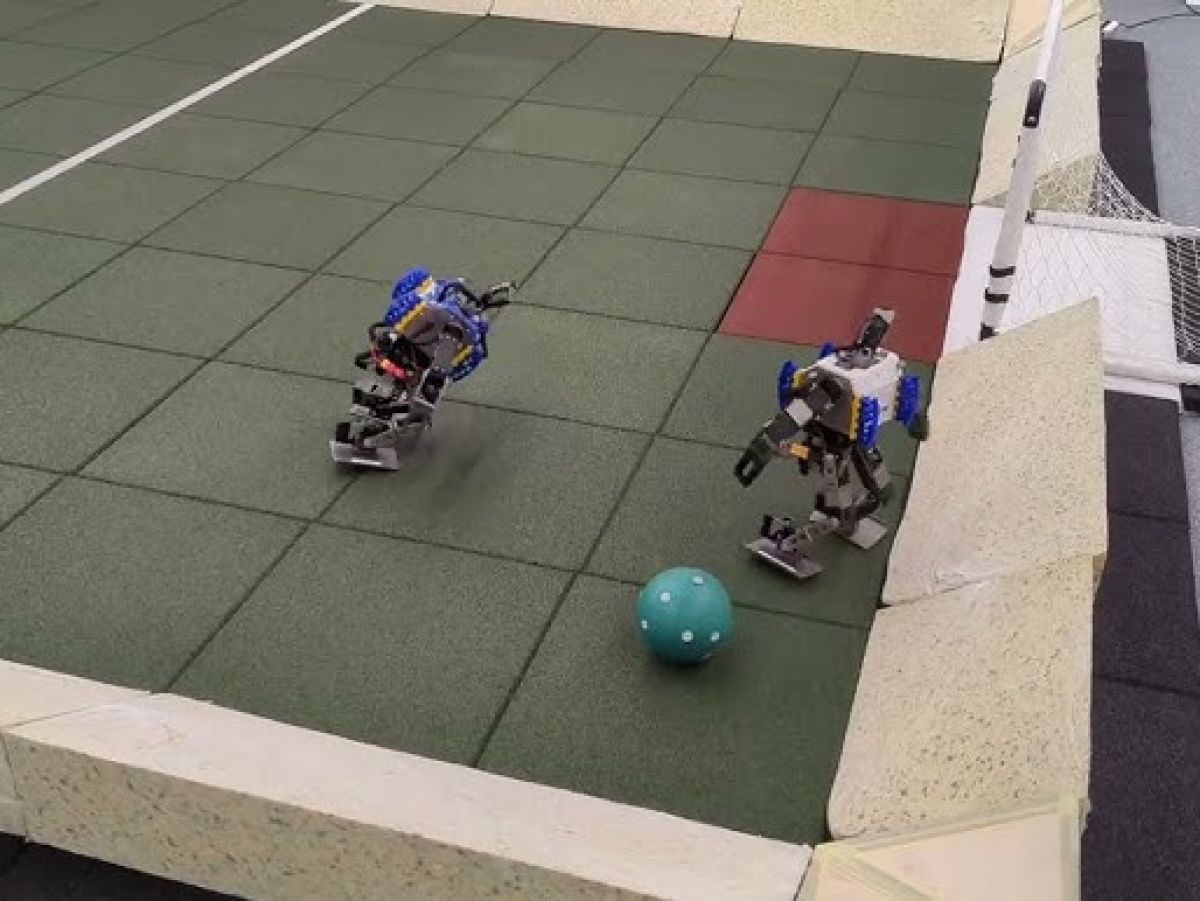

These two soccer players run, walk, dribble around opponents, fall, get up, shoot and score goals. Nothing unusual, except that they are mini-robots from Robotis, 51 cm tall and weighing 3.5 kg, equipped with 20 joints and fully autonomous.

The machines were trained to play soccer by a team at Google DeepMind using a machine learning method known as reinforcement learning. The project, called OP3 Soccer, is the subject of an article published in the journal Science Robotics in April 2024.

Instead of learning from a database of examples, the algorithms that control the robot learn what to do in a given situation through trial and error. There are no pre-programmed instructions: the algorithm does not know in advance what to do. It therefore makes many mistakes and risky decisions, but every well-executed movement earns a reward signal. The algorithm thus learns bit by bit, reinforcing the actions that were rewarded.
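To make the trial-and-error idea concrete, here is a minimal toy sketch in Python. It is not the method used by the DeepMind team, which relies on deep reinforcement learning in a physics simulator. It is a simple epsilon-greedy learner, and the three kick names and their success probabilities are invented purely for illustration.

```python
import random

def train_by_trial_and_error(success_prob, episodes=5000, epsilon=0.1, seed=0):
    """Toy epsilon-greedy learner: estimate each action's average reward by trying it."""
    rng = random.Random(seed)
    actions = list(success_prob)
    value = {a: 0.0 for a in actions}   # running estimate of the reward per action
    count = {a: 0 for a in actions}     # how many times each action was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)                     # explore: try something at random
        else:
            action = max(actions, key=lambda a: value[a])    # exploit: best estimate so far
        # Reward signal: 1 if the attempt succeeds, 0 otherwise.
        reward = 1.0 if rng.random() < success_prob[action] else 0.0
        count[action] += 1
        # Incremental mean: nudge the estimate towards the observed reward.
        value[action] += (reward - value[action]) / count[action]
    return value

# Hypothetical actions and success probabilities, assumed only for this example.
HYPOTHETICAL_KICKS = {"toe_poke": 0.2, "side_foot": 0.5, "pivot_and_shoot": 0.8}

estimates = train_by_trial_and_error(HYPOTHETICAL_KICKS)
best_action = max(estimates, key=estimates.get)
print(best_action, estimates)
```

The learner is never told which kick is best. It only sees rewards, and over many attempts its estimates drift towards the action that pays off most often.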

An algorithm that fumbles for a very long time

This approach is interesting because it leads the algorithm to settle on the techniques and movements it finds most effective, even when they are unexpected or counterintuitive. Some would never be coded by engineers into the instructions of a pre-programmed robot. “A good example is the way the robot rotates and pivots on the corner of one foot, which would be very difficult to program, but turned out to be more efficient than the traditional approach,” write the authors of the article.

However, reinforcement learning takes a lot of time. First, because unlike humans, who understand what to do after one or two tries, the algorithm fumbles for a very long time. Second, because each training run focuses on only one aspect of the activity to be learned: getting up, shooting, going around an opponent and facing one each require a dedicated learning phase.

Training therefore takes place first in simulation. The algorithms, which will later be loaded onto the robots, first control two avatars on a virtual pitch. This avoids repeated physical handling and possible damage when the machines fall (see the DeepMind project page for videos of training in simulation and in real conditions).

A camera for a head

The algorithm is then deployed in real conditions on programmable robots from Robotis. They rely on a motion capture system, with a Logitech camera for a head and infrared markers on the body (and on the ball).

However, the transition from simulation to the real environment is not seamless. For example, for an action consisting of standing up and shooting, the shot was on target in 70% of 50 simulated trials. In real-world conditions, this score drops to 58% over 50 trials. So there is a loss of effectiveness. “But in most cases the robot remains able to stand up, hit the ball and score,” the researchers emphasize.
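As a quick worked example, the two success rates above can be turned into counts and a relative drop. The short Python sketch below uses only the figures quoted in the article (70% and 58%, each out of 50). The function and variable names are illustrative.

```python
def successes(rate_percent, trials):
    """Number of successful attempts implied by a success rate in percent."""
    return round(rate_percent * trials / 100)

SIM_RATE, REAL_RATE, TRIALS = 70, 58, 50  # figures quoted in the article

sim_goals = successes(SIM_RATE, TRIALS)    # shots on target in simulation
real_goals = successes(REAL_RATE, TRIALS)  # shots on target on the real robot
gap_points = SIM_RATE - REAL_RATE          # drop in percentage points
relative_drop = gap_points / SIM_RATE      # drop relative to the simulated score

print(f"{sim_goals} vs {real_goals} successes, "
      f"{gap_points} points lower ({relative_drop:.0%} relative drop)")
```

In other words, the real robot scores on 29 attempts where the simulated one scores on 35, a drop of 12 percentage points, or roughly one sixth of the simulated success rate.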

Overall, the robots trained with reinforcement learning perform far better than a robot whose movements have been programmed by hand. The researchers ran comparative tests: the former walks 181% faster than the latter, changes direction 302% faster, takes 63% less time to get up after falling and kicks the ball 34% faster.
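Percentages such as "181% faster" are easy to misread, so the sketch below converts the quoted figures into plain ratios. It assumes the usual reading of these phrases: "X% faster" means the speed is multiplied by 1 + X/100, and "X% less time" means the duration is multiplied by 1 - X/100. The helper names are illustrative.

```python
def speed_multiplier(percent_faster):
    """'X% faster' read as: new speed = old speed * (1 + X/100)."""
    return 1 + percent_faster / 100

def time_fraction(percent_less_time):
    """'X% less time' read as: new duration = old duration * (1 - X/100)."""
    return 1 - percent_less_time / 100

walking = speed_multiplier(181)   # about 2.8 times the scripted walking speed
turning = speed_multiplier(302)   # about 4 times the scripted turning speed
kicking = speed_multiplier(34)    # about 1.3 times the scripted kick
getting_up = time_fraction(63)    # about 0.37 of the scripted get-up time

print(f"walk x{walking:.2f}, turn x{turning:.2f}, "
      f"kick x{kicking:.2f}, get-up time x{getting_up:.2f}")
```

Under that reading, the learned controller walks nearly three times as fast as the scripted one and gets back on its feet in a little over a third of the time.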

Towards bigger soccer robots?

A number of limitations remain, which the researchers acknowledge. What works well for small robots may not transfer to larger machines. Relying on infrared cameras is not always practical: the markers placed on the ball are sometimes in blind spots, and in the end only those on top are visible to the cameras. Finally, an additional dose of data-driven learning could also improve the machines' behavior and performance, especially in the transition from the simulated environment to real-world conditions.